Master LLM Pentesting: AI Security Pro 2024


Total duration: 1:53:14

1 - Introduction.mp4

01:16

2 - What is LLM and Its Architecture.mp4

07:57

3 - LLM Security.mp4

03:15

4 - Data Security.mp4

07:08

5 - Model Security.mp4

05:22

6 - Infrastructure Security.mp4

01:35

7 - Ethical Considerations.mp4

01:51

8 - LLM Owasp Top 10.mp4

19:46

9 - Exploiting LLM APIs with excessive agency.mp4

15:48

10 - Exploiting vulnerabilities in LLM APIs.mp4

16:53

11 - Indirect prompt injection.mp4

20:14

12 - Exploiting insecure output handling in LLMs.mp4

07:54

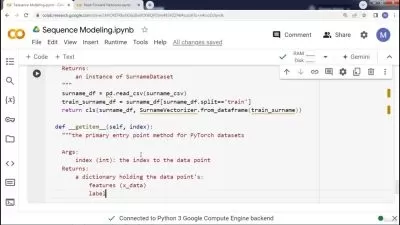

13 - Input Sanitization Techniques.mp4

01:18

14 - Model Guardrails and Filtering.mp4

02:57


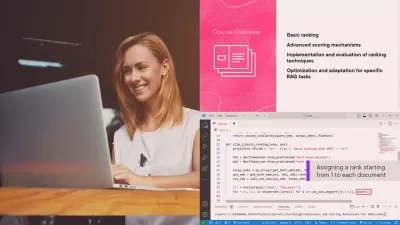

Course Overview

This hands-on course teaches techniques for testing and securing Large Language Models (LLMs). Covering the OWASP Top 10 for LLM Applications, ethical hacking methods, and defensive strategies, it prepares you for real-world AI security challenges.

What You'll Learn

- Exploit and defend against LLM vulnerabilities such as prompt injection and insecure LLM APIs

- Implement OWASP-recommended security measures for AI systems

- Build robust guardrails and apply input sanitization techniques to production LLMs

Who This Is For

- Cybersecurity professionals expanding into AI security

- ML engineers responsible for model security

- Ethical hackers exploring LLM vulnerabilities

Key Benefits

- Interactive hacking exercises including LLM attack simulations

- Real-world case studies of major LLM security breaches

- Future-proof skills for the evolving AI threat landscape

Curriculum Highlights

- LLM Architecture & Security Fundamentals

- Offensive Techniques: Hacking LLM Systems

- Defensive Strategies: Securing AI Models


Course Details

- Language: English

- Lessons: 14

- Duration: 1:53:14

- Subtitles: English

- Release date: 2025/04/26